@show specformat natural

what is team? football team, basketball team, rugby team

@show specformat none

what is exchange? stock_exchange

This is the format in which multi-word concepts are stored in memory, and therefore the format to use with @show term.

@mode log [on/off] {folder + filename}

Saves the activity (the same output that is shown in the console) to the specified file.

By default this option is set to OFF.

If the flag is set to 'off', the file name and path are ignored.

Be careful: if the file cannot be created or is not writable, no error message is shown in the console.

@mode tendfilter [number]

Filters out relations whose tendency is lower than the indicated number in frame/set searches (question answering).

By default this option is set to zero, which means that no tendency filter is applied.

Example: memory contains only the following knowledge:

cat IS nice 4

mammal IS nice 2

mammal IS big -2

so:

@mode tendfilter 2

what is nice? cat, mammal

@mode tendfilter 3

what is nice? cat

@mode tendfilter 5

what is nice? None

is cat nice? Unknown

is cat big? Unknown (negatives are also considered)

@mode tendfilter 0

is cat nice? Yes

is cat big? No

Note: when this filter is active, the confirmation mechanism is automatically disabled.
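The tendency filter above can be pictured with a minimal Python sketch. The `RELATIONS` dictionary and both function names are hypothetical and only mirror the example; the comparison by absolute value is an assumption based on the remark that negatives are also considered. It is not the application's actual implementation.

```python
# Hypothetical in-memory model: (subject, feature) -> tendency.
# Illustration only; not the application's real data structure.
RELATIONS = {
    ("cat", "nice"): 4,
    ("mammal", "nice"): 2,
    ("mammal", "big"): -2,
}


def passes_tendfilter(tendency, threshold):
    """Keep a relation unless its tendency magnitude is below the threshold."""
    # Assumption: negative tendencies are compared by absolute value,
    # so a threshold of 0 lets every relation through.
    return abs(tendency) >= threshold


def what_is(feature, threshold):
    """Return the subjects positively related to `feature` after filtering."""
    return [
        subject
        for (subject, feat), tendency in RELATIONS.items()
        if feat == feature and tendency > 0 and passes_tendfilter(tendency, threshold)
    ]


print(what_is("nice", 2))  # ['cat', 'mammal']
print(what_is("nice", 3))  # ['cat']
print(what_is("nice", 5))  # []
```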

@mode conffilter [on/off]

Used in question answering to filter out relations that have not been confirmed by the user.

By default this option is set to off.

Example: memory contains only the following knowledge:

cat IS mammal 1 1+15 (source 1=administrator / 15=Contrast & Verified)

mammal IS nice 1 1+15

mammal IS large 1 1

so:

@mode deepsearch on

@mode conffilter off

is cat nice? Yes

is cat large? Yes

@mode conffilter on

is cat nice? Yes

is cat large? Unknown

Note: when this filter is active, the confirmation mechanism is automatically disabled.
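As a rough illustration of the example above, the following Python sketch treats memory as a set of IS relations tagged with source codes and answers a question by following those relations. The `IS_RELATIONS` store, the `is_a` function and the traversal strategy are all assumptions made for illustration; the only facts taken from the example are the relations themselves and the meaning of source code 15.

```python
CONFIRMED = 15  # source code for "Contrast & Verified" in the example above

# Hypothetical store of IS relations: (subject, object) -> set of source codes.
IS_RELATIONS = {
    ("cat", "mammal"): {1, 15},
    ("mammal", "nice"): {1, 15},
    ("mammal", "large"): {1},
}


def is_a(subject, target, conffilter=False):
    """Answer 'is <subject> <target>?' by following IS relations (deep search)."""
    seen, pending = set(), [subject]
    while pending:
        current = pending.pop()
        if current == target:
            return "Yes"
        if current in seen:
            continue
        seen.add(current)
        for (src, dst), sources in IS_RELATIONS.items():
            # With the filter on, only user-confirmed relations may be used.
            usable = not conffilter or CONFIRMED in sources
            if src == current and usable:
                pending.append(dst)
    return "Unknown"


print(is_a("cat", "large", conffilter=False))  # Yes
print(is_a("cat", "large", conffilter=True))   # Unknown
```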

@mode multfilter [on/off]

Used in question answering to filter out relations that have not been mentioned by at least 2 different sources.

By default this option is set to off.

Example: memory contains only the following knowledge:

cat IS mammal 1 4+7 (sources: 4=books / 7=web pages / 15=confirmed / 16=absolutely true)

mammal IS nice 1 4+15

mammal IS large 1 4

mammal IS small 1 4+7

mammal IS pink -1 4+7

mammal IS yellow -1 16

so:

@mode multfilter on

is mammal nice? Unknown (the confirmed source is not taken into account)

is mammal large? Unknown (only one source)

is mammal small? Yes (2 sources)

is mammal pink? No (2 sources)

is mammal yellow? No (absolutely true sources are never filtered)

Note: when this filter is active, the confirmation mechanism is automatically disabled.
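The source-counting rules in this example can be summarised in a short Python sketch. The function names and the `MAMMAL` dictionary are hypothetical; the three rules (source 15 is not counted, source 16 always passes, at least two remaining sources are required) are read directly from the example above.

```python
CONFIRMED = 15      # user confirmation; not counted as an independent source
ABSOLUTE_TRUE = 16  # absolutely true sources are never filtered


def passes_multfilter(sources):
    """Return True if a relation survives the multiple-source filter."""
    if ABSOLUTE_TRUE in sources:
        return True
    independent = set(sources) - {CONFIRMED}
    return len(independent) >= 2


def answer(tendency, sources):
    """Answer Yes/No from the tendency sign, or Unknown if filtered out."""
    if not passes_multfilter(sources):
        return "Unknown"
    return "Yes" if tendency > 0 else "No"


# Features of "mammal" from the example: feature -> (tendency, sources).
MAMMAL = {
    "nice": (1, {4, 15}),
    "large": (1, {4}),
    "small": (1, {4, 7}),
    "pink": (-1, {4, 7}),
    "yellow": (-1, {16}),
}

for feature, (tendency, sources) in MAMMAL.items():
    print(f"is mammal {feature}? {answer(tendency, sources)}")
```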

@show help

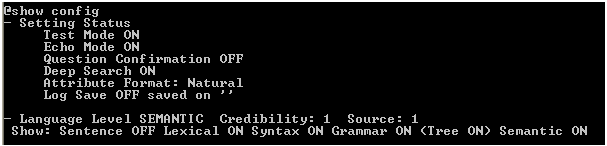

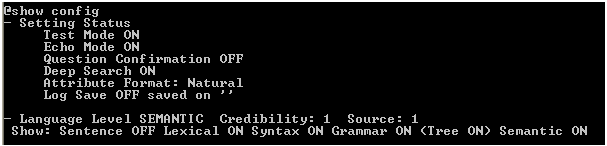

@show config

Show the current settings.

Essentially, it reflects the status of the settings that can be modified with @mode orders.

@show guessthres (0-100)

Parameter to limit the results of a question when guess mode is on.

The answer returns only those concepts whose fulfillment percentage is higher than this threshold.

0 is the default value.

@mode deepsearch on

@mode guessing on

@show guessthres 0

@show guessperc on

what is animal or pet and can hit and is feline? cat 100%, dog 75%, bear 50%

@show guessthres 60

what is animal or pet and can hit and is feline? cat 100%, dog 75%

@show guessthres 75

what is animal or pet and can hit and is feline? cat 100%

Note that if the threshold is 100, the results obtained with guessing mode on (approximate search) are the same as with guessing mode off (exact search), but they are obtained much less efficiently.

@show guessmax (positive integer)

Only applies when guess mode is on. The answer returns at most the first N concepts, where N is the value of this parameter.

Setting the value to zero disables this behaviour, so no elements are removed from the answer.

0 is the default value.

@mode deepsearch on

@mode guessing on

@show guessmax 0

what is animal or pet and can hit and is feline? cat 100%, dog 75%, bear 50%

@show guessmax 2

what is animal or pet and can hit and is feline? cat 100%, dog 75%

@show guessmax 10

what is animal or pet and can hit and is feline? cat 100%, dog 75%, bear 50%

@show guessperc [on/off]

The answer shows the fulfillment percentage associated with each concept.

Only applies when guess mode is on.

On is the default value.

@mode deepsearch on

@mode guessing on

@show guessperc on

what is animal or pet and can hit and is feline? cat 100%, dog 75%, bear 50%

@show guessperc off

what is animal or pet and can hit and is feline? cat, dog, bear
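The combined effect of guessthres, guessmax and guessperc on an answer can be sketched in Python as follows. The function name and the `MATCHES` list are hypothetical, and the percentages are simply taken from the examples rather than computed. The strict "higher than" comparison is an assumption that matches the documented results for thresholds 0, 60 and 75.

```python
def format_guess_answer(matches, guessthres=0, guessmax=0, guessperc=True):
    """Post-process guessed matches given as (concept, percentage) pairs."""
    # Best matches first.
    ranked = sorted(matches, key=lambda m: m[1], reverse=True)
    # Assumption: only percentages strictly above the threshold are kept.
    kept = [(concept, perc) for concept, perc in ranked if perc > guessthres]
    # A guessmax of 0 means "no limit".
    if guessmax > 0:
        kept = kept[:guessmax]
    if guessperc:
        return ", ".join(f"{concept} {perc}%" for concept, perc in kept)
    return ", ".join(concept for concept, _ in kept)


MATCHES = [("cat", 100), ("dog", 75), ("bear", 50)]

print(format_guess_answer(MATCHES))                   # cat 100%, dog 75%, bear 50%
print(format_guess_answer(MATCHES, guessthres=60))    # cat 100%, dog 75%
print(format_guess_answer(MATCHES, guessmax=2))       # cat 100%, dog 75%
print(format_guess_answer(MATCHES, guessperc=False))  # cat, dog, bear
```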

@show term

Shows the syntactic data of the TERM (lexicon) and the relations and characteristics learned about the described CONCEPT (frames / sets).

Examples:

@show term cat

CONCEPT [9] cat noun [4]

FRAME 15

parents: mammal(2) pet(-2)

features: nice(2){very} short(1)

attributes: leg(2){4} fur(2)

skills: run(1)[field(2)/sky(-1)]

affected: train(1)

SETS

@show term dog

CONCEPT [21] dog noun [11]

FRAME 4

skills: run(1){specially-very} jump(2) eat(1)[meat(1)]

adjnoun: animal

ofclauses: wood

SETS

A word with more than one syntactic type and no frame:

@show term trained

CONCEPT [22] train adjective/verb

SETS

affecteds: cat

If the term does not exist in memory:

@show term nono

not found

You can also ask for attributes of concepts using the % symbol:

concept%attribute{%attribute ...} E.g. leg of cat → cat%leg

@show term cat%leg

CONCEPT [19] cat%leg noun [9]

FRAMES 7

affecteds: leg(2)

features: short(2)

skills: run(2)

SETS

attributes: cat

Or even for specializations (multi-word concepts) using the underscore:

noun_noun{_noun ...} E.g. stock exchange → stock_exchange

@show term Football_Team

CONCEPT [21] football_team noun [12]

FRAMES

parents: team(1)

SETS

Notes:

If features or skills have related adverbs, these are shown after the affected element as a dash-separated list enclosed in curly braces.

If a skill has interactions, these are shown after the affected skill as a slash-separated list enclosed in square brackets.
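For readers who want to post-process this output, here is a minimal Python sketch that parses a single feature or skill item of the form name(tendency){adverbs}[interactions]. The regular expression, the `parse_item` function and the returned dictionary keys are hypothetical and derived only from the examples and notes above. Other sections, such as attributes, may use the curly braces for something other than adverbs and are not covered.

```python
import re

# One item: name, optional (tendency), optional {adverbs}, optional [interactions].
ITEM = re.compile(
    r"(?P<name>[^\s(){}\[\]]+)"      # element name
    r"(?:\((?P<tendency>-?\d+)\))?"  # optional (tendency), may be negative
    r"(?:\{(?P<adverbs>[^}]*)\})?"   # optional {adverb-adverb}
    r"(?:\[(?P<inter>[^\]]*)\])?"    # optional [interaction/interaction]
)


def parse_item(text):
    """Parse one feature/skill item into a dictionary."""
    match = ITEM.fullmatch(text)
    if match is None:
        raise ValueError(f"unrecognised item: {text!r}")
    tendency, adverbs, inter = match["tendency"], match["adverbs"], match["inter"]
    return {
        "name": match["name"],
        "tendency": int(tendency) if tendency is not None else None,
        "adverbs": adverbs.split("-") if adverbs else [],
        # Interactions use the same item syntax, so they are parsed recursively.
        "interactions": [parse_item(i) for i in inter.split("/")] if inter else [],
    }


print(parse_item("run(1){specially-very}"))
print(parse_item("eat(1)[meat(1)]"))
```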

@load file {path/}filename

Processes an entire text file, analyzing the sentences line by line.

Useful to avoid manually entering a large number of sentences, or for processing large texts.

Using the standard settings on a common personal computer, the application is able to process an average of 6000 sentences per hour.

@load book {path/}filename

Processes a file by splitting its content into chunks at each dot (period) instead of at each line break.

If you provide a file with the following content:

hello

world.

bye

to everyone

It will be processed as:

| @load file | @load book |
| --- | --- |
| hello | hello world |
| world | bye to everyone |
| bye | |
| to everyone | |
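The difference between the two chunking strategies can be reproduced with a small Python sketch. Both function names are hypothetical and the splitting rules are inferred only from the example above, including the assumption that a trailing dot is dropped in line mode. The real application may treat abbreviations, decimals or other punctuation differently.

```python
def chunks_by_line(text):
    """@load file style: one chunk per non-empty line."""
    # Assumption: a trailing dot is dropped, as in the example above.
    return [line.strip().rstrip(".") for line in text.splitlines() if line.strip()]


def chunks_by_dot(text):
    """@load book style: one chunk per dot-terminated span, ignoring line breaks."""
    # Splitting on "." and re-joining on whitespace merges the lines of a chunk.
    return [" ".join(part.split()) for part in text.split(".") if part.strip()]


SAMPLE = "hello\nworld.\nbye\nto everyone\n"

print(chunks_by_line(SAMPLE))  # ['hello', 'world', 'bye', 'to everyone']
print(chunks_by_dot(SAMPLE))   # ['hello world', 'bye to everyone']
```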

@load batch {path/}filename

Processes every order and sentence written in the indicated file.

Useful for automating tasks or establishing an initial configuration.

@load ilcodes {path/}filename

Processes a file containing Internal Language Codes.

This allows knowledge to be inserted directly into memory, without the need to reprocess the sentences into Internal Language.

It is useful if you have preprocessed sentences (for example, from the "internal_language.txt" output file produced when sentences are processed in test mode), or if you need to recover the content of the memory after the system files have been corrupted.

@load ilc "internal language code"

Inputs knowledge directly into memory without using the NLPkit.

Nevertheless, it is highly recommended to use sentence processing and question confirmation for these tasks.
